The organizations

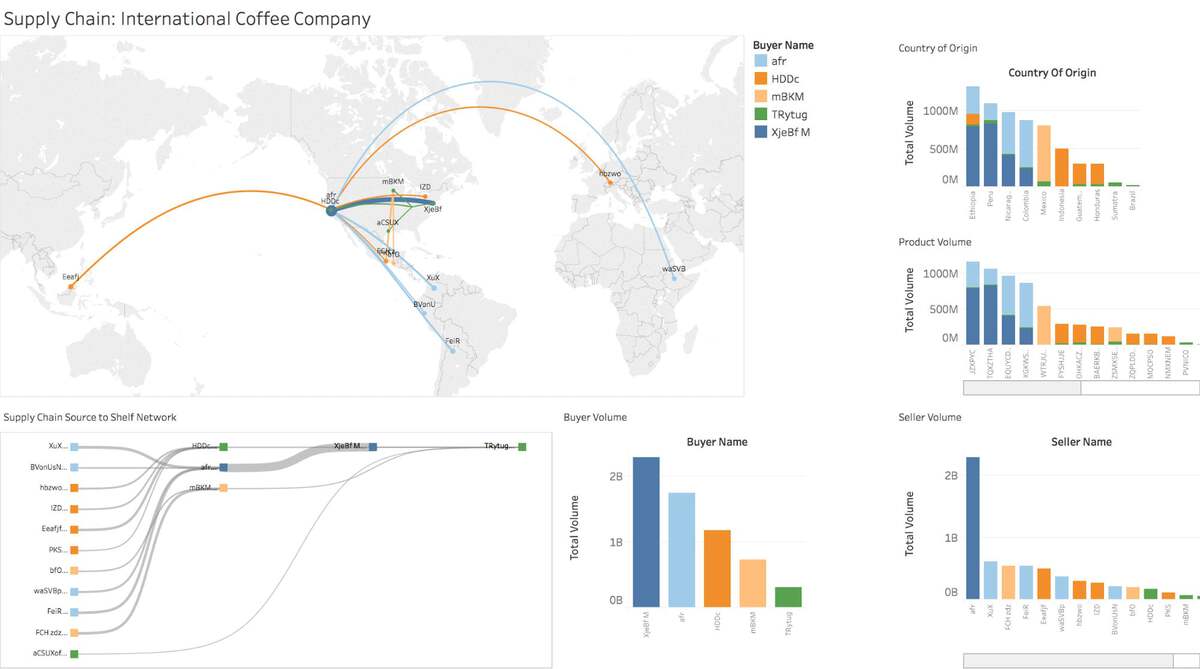

The Pan African Programme at the Max Planck Institute for Evolutionary Anthropology aims to understand the evolutionary and ecological drivers of chimpanzee cultural and behavioral diversity. The programme collects systematic data on chimpanzee populations from over 40 research and conservation sites across Africa.

WILDLABS provides a platform for bringing together the world's conservation technology community to accelerate the development and deployment of conservation technologies.

The challenge

Camera traps have become essential tools for wildlife conservation, generating massive datasets that enable researchers to study animal behavior, track population changes, and assess conservation impacts. However, these motion-triggered cameras create an overwhelming volume of data: a single deployment can produce tens of thousands of videos and images, with up to 70% containing no animals at all. Manual review of this footage is a critical bottleneck that consumes valuable researcher time and leaves much camera trap data underused.

The conservation community needed an accurate, accessible solution to identify animals in camera trap data that could work across diverse habitats and species while being usable by researchers without programming expertise or specialized computing hardware.

The approach

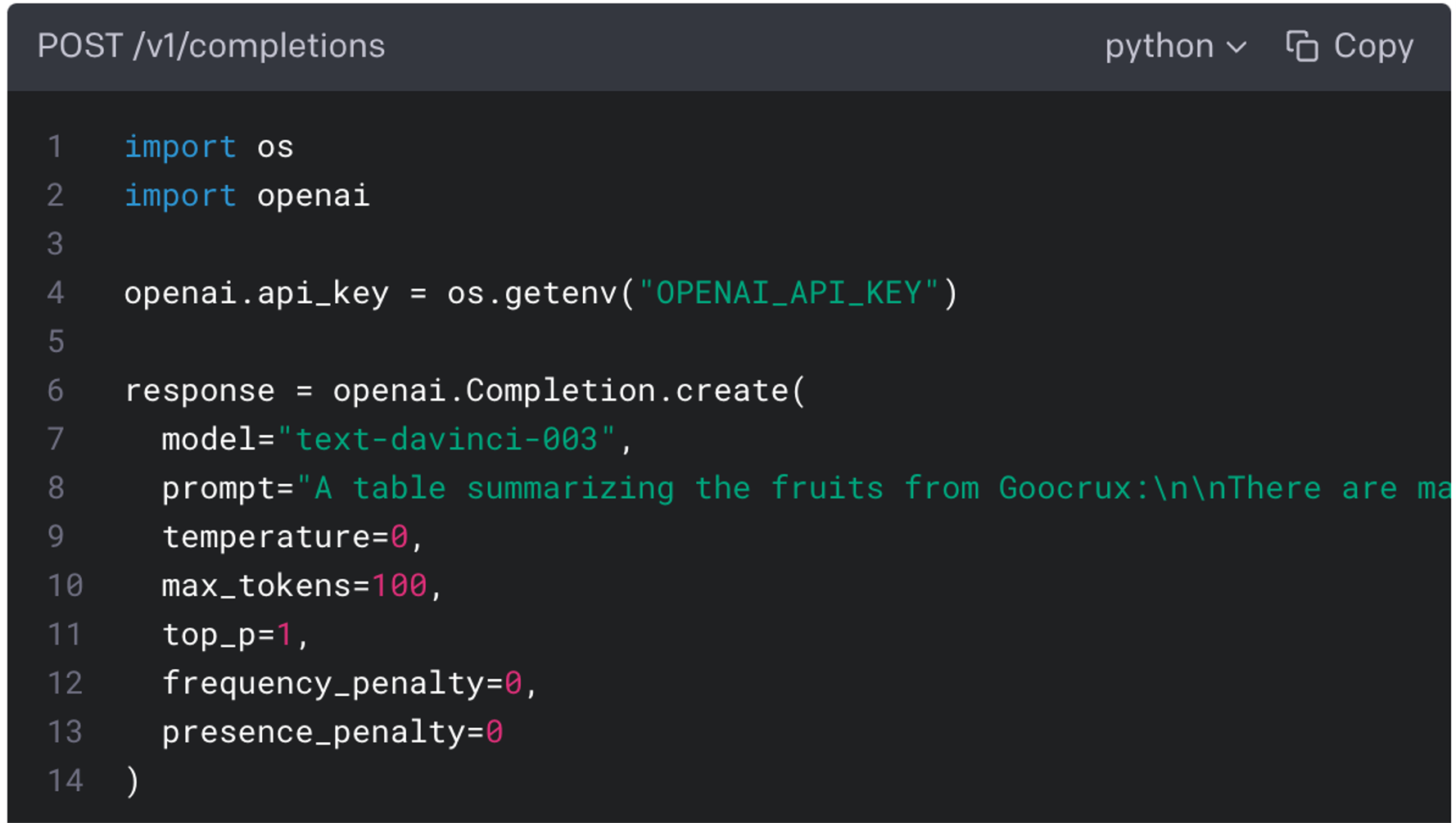

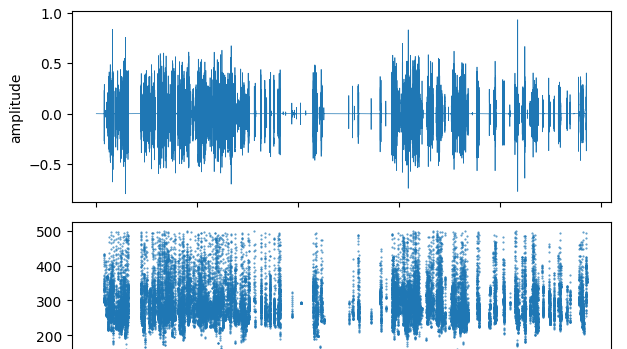

DrivenData collaborated with conservation researchers through a series of machine learning competitions to develop automated wildlife identification models. In the Pri-matrix Factorization Challenge, participants trained machine learning models to classify 23 species from nearly 2,000 hours of annotated camera trap footage. The follow-up Deep Chimpact Challenge focused on monocular depth estimation—inferring the distance between the animal and the camera—which is an intermediate step for estimating population abundance using distance sampling methods.

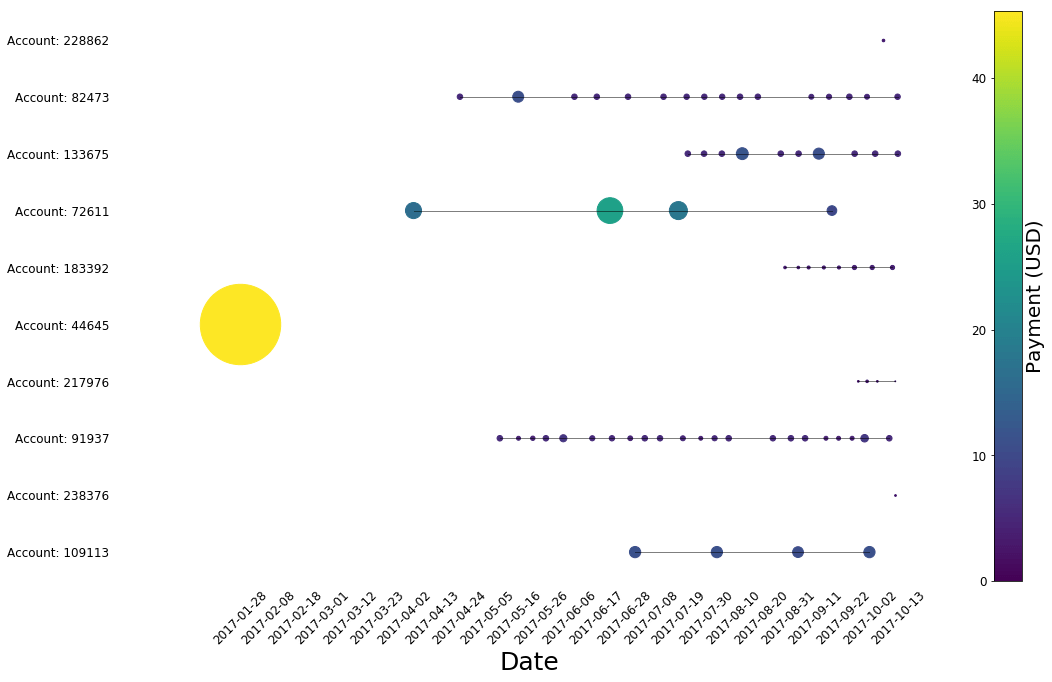

Building on the winning algorithms, DrivenData created Zamba, an open-source Python package that uses machine learning and computer vision to automatically detect and classify animals in camera trap images and videos. Video models were trained on 250,000 labeled videos from 14 countries across Central and West Africa, and image models were developed using over 15 million annotations from 7 million images across 20 global datasets.
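To illustrate how per-file classification output can reduce manual review of mostly empty footage, here is a minimal Python sketch. It is not Zamba's API or output format: the probability table, the label names, the `triage` and `top_label` helpers, and the 0.9 blank threshold are all hypothetical, chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical per-file class probabilities, shaped like a classifier's output table.
# File names, labels, and values are illustrative only (not Zamba's actual format).
Predictions = Dict[str, Dict[str, float]]


def top_label(probs: Dict[str, float]) -> Tuple[str, float]:
    """Return the most probable label and its probability."""
    label = max(probs, key=lambda k: probs[k])
    return label, probs[label]


def triage(
    preds: Predictions, blank_label: str = "blank", blank_threshold: float = 0.9
) -> Tuple[List[str], List[str]]:
    """Split files into (to_review, likely_blank).

    A file is set aside as likely blank only when its blank probability
    meets the (hypothetical) threshold; everything else is kept for review.
    """
    to_review: List[str] = []
    likely_blank: List[str] = []
    for path, probs in sorted(preds.items()):
        if probs.get(blank_label, 0.0) >= blank_threshold:
            likely_blank.append(path)
        else:
            to_review.append(path)
    return to_review, likely_blank


if __name__ == "__main__":
    preds = {
        "vid_001.mp4": {"blank": 0.97, "chimpanzee": 0.02, "elephant": 0.01},
        "vid_002.mp4": {"blank": 0.05, "chimpanzee": 0.90, "elephant": 0.05},
        "vid_003.mp4": {"blank": 0.60, "chimpanzee": 0.10, "elephant": 0.30},
    }
    review, blank = triage(preds)
    print("review:", review)
    print("likely blank:", blank)
    for path in review:
        print(path, top_label(preds[path]))
```

A high blank threshold errs toward keeping uncertain files (such as `vid_003.mp4` above, whose top label is "blank" at only 0.60) for human review, so only confidently empty footage is set aside.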

To make these capabilities accessible to non-programmers, the team developed Zamba Cloud, a no-code web platform that runs machine learning workloads on managed cloud infrastructure.

The results

Zamba is unique in its ability to process camera trap video data. Unlike most existing tools, which support only image data, Zamba enables automatic species classification for both still images and videos, as well as depth estimation from videos. Zamba is also distinctive in supporting custom model training through fine-tuning, so researchers can adapt the base models to their own species and habitats rather than relying solely on the pretrained models. This makes Zamba useful across the wide variety of environments in which camera traps are deployed.

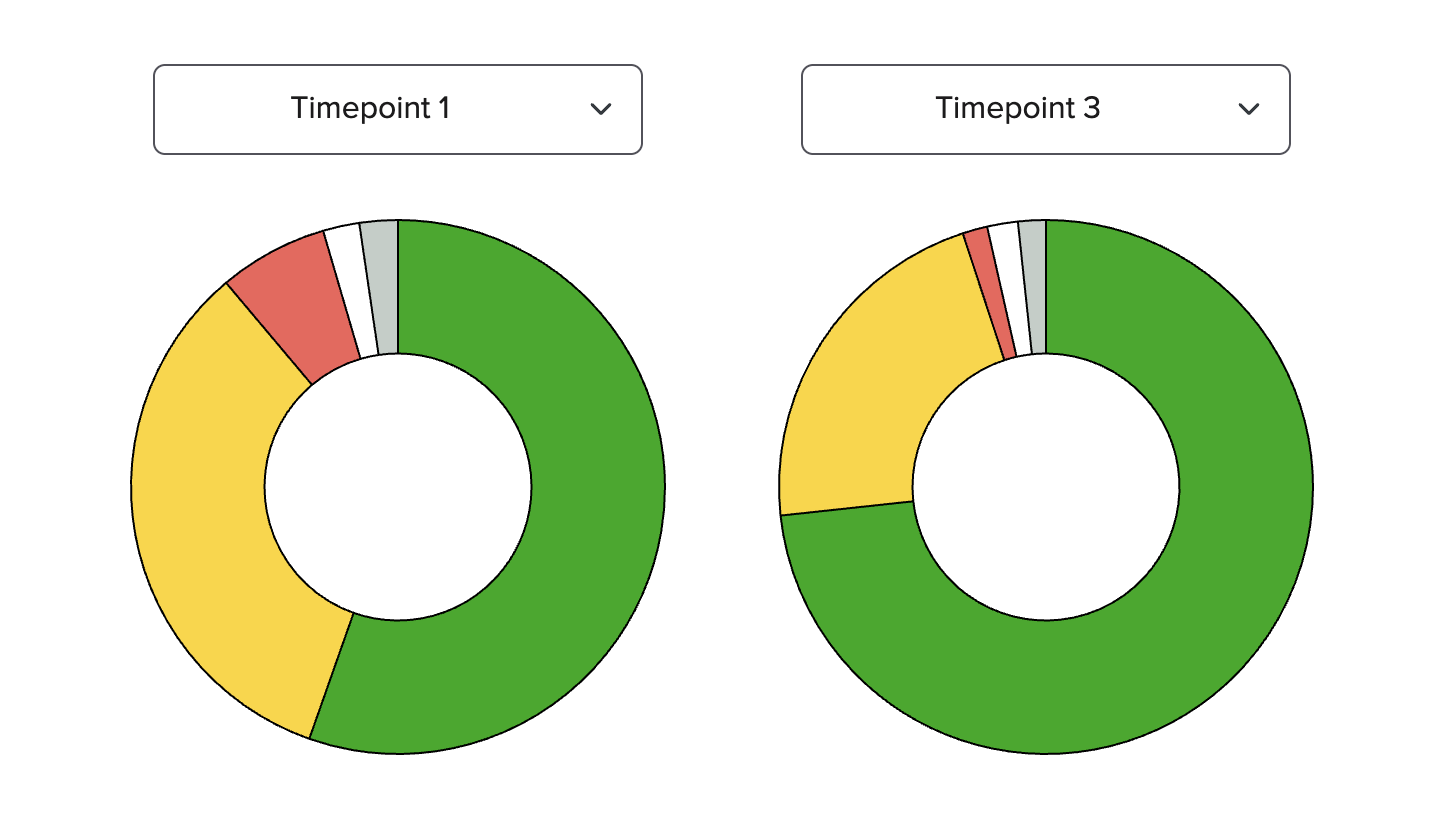

Moreover, Zamba Cloud's no-code custom model training capability democratizes access to sophisticated AI tools. Over 300 users from around the world have used Zamba Cloud to process more than 1.1 million videos.

By enabling conservationists to efficiently analyze large datasets and train models tailored to their specific ecological contexts, Zamba advances wildlife monitoring, research, and evidence-based conservation.