Introduction

The Concept to Clinic challenge asks competitors to help adapt research-oriented machine learning algorithms to practical clinical settings. Specialists from the worlds of data science, software development, and user interface design are contributing changes to the project's application codebase while competing for points and prizes.

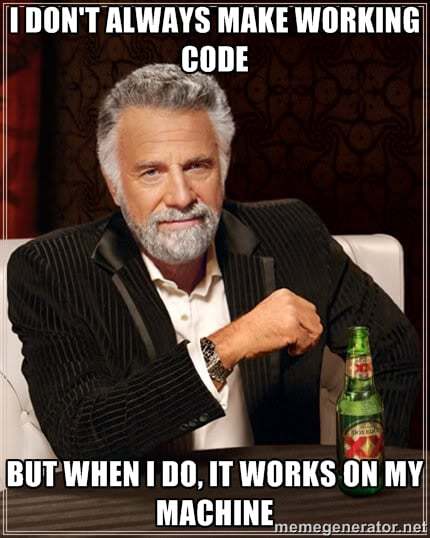

The segmentation of competitor specialties means that experts across these different disciplines need to be able to rely on each other's code working "on their machine" — dependencies and all.

For example, an expert in classification algorithms still needs a properly functioning front-end interface in order to interact with the results of their model training. Similarly, the backend database code needs to work seamlessly with the classification algorithms for the application to fulfill its goals. A user experience designer doesn't want to (and shouldn't have to) spend an afternoon setting up a machine learning environment for Keras with a TensorFlow backend, or making sure they have the right version of pandas. Likewise, users shouldn't have to read the Postgres installation documentation to get the database running with a user login and the correct port open; this should all just work!

Ensuring easy setup of the application stack and efficient segmentation of the various components of the Concept to Clinic challenge is precisely where Docker excels, especially when used with Docker Compose, its tool for declaring and orchestrating multiple services. In this post, we'll dive into some background on Docker and explain how we're using it in the Concept to Clinic challenge.

What is Docker?

Docker provides an elegant solution to what many like to call the "But it works on my machine!" problem.

First, what Docker is not. Docker is not a virtual machine; instead, it relies on OS-level containers, which are similar to virtual machines but much lighter in terms of computational resources. Containers don't require a guest operating system. Rather, they share the host machine's kernel and resources but run in isolated user spaces, which allows them to be fast while maintaining strict separation from the host OS.

Docker is a system for deploying containers, which can be frozen as artifacts called Docker images. Software packaged as a Docker image is intended to work on any other machine with Docker. The goal is for Docker to provide an identical blank slate for running applications, even on vastly different computers and operating systems, with environment setup and management handled entirely by Docker "under the hood." Particularly when interconnected services depend on relatively complicated configuration, this makes application deployment much simpler than with non-containerized solutions.

As the company behind the Docker project puts it, Docker allows you to "Build, Ship, and Run Any App, Anywhere." Furthermore, Docker images can be shared with any machine running Docker through Docker Hub, Docker's cloud repository. If it sounds too good to be true, just wait: it gets even better!

What is Docker Compose?

A smart development process can use Docker to segment each portion of the application architecture into loosely coupled containers called services. Docker Compose is a tool for defining and controlling interactions between various services in an application.

Usually, if our app has multiple services, we want to run them together so that they can communicate with one another (e.g., with HTTP requests to APIs, database connections, or RPC calls). In such a scenario, each developer needs the services to communicate but does not want to spend precious time setting up a portion of the stack she will never edit. Compose lets us do this with a single command: docker-compose up. With Docker Compose, application services are efficiently linked together and run on a single Docker host.

In the Concept to Clinic challenge, this means that the ML prediction service, the backend API, and the frontend web service can each be developed independently, yet the full stack can easily be built and run deterministically. After cloning the Concept to Clinic repository and installing Docker with Docker Compose (check here to see if you need to install Compose separately), it takes only a couple of commands to have the application up, running, and ready for your winning improvements. Before we get to those commands, let's take a closer look at how Docker Compose makes all this possible.

At the heart of the Docker composition process is the compose configuration file. This YAML file serves many functions, including declaring which version of the Compose file format it uses (which determines the Docker Engine releases it is compatible with), listing the service containers to be run, and defining various customizations for each service, such as setting environment variables or specifying dependencies and links between services. An application's compose configuration file gives a bird's-eye view of the software's basic components, their configurations, and the dependencies between them.
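As a rough illustration of that structure, here is a minimal, generic compose file. It is a sketch under stated assumptions, not an excerpt from the Concept to Clinic repository: the service names db and web, the build context, and the user name are placeholders.

```yaml
# Hypothetical minimal compose file showing the top-level keys.
version: '2'               # Compose file format version, not the Engine version

services:
  db:                      # an off-the-shelf image pulled from Docker Hub
    image: postgres:9.6
    environment:
      - POSTGRES_USER=example_user     # placeholder value

  web:                     # a service built from a Dockerfile in the current directory
    build: .
    environment:
      - POSTGRES_USER=example_user     # same value shared with db
    depends_on:
      - db                 # start db before web
```

Each key under services becomes one container, and settings such as environment and depends_on are scoped to the service they appear under.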

Note: depending on how Docker is set up on your system, you may need to prefix docker and docker-compose commands with sudo in order to run them as root. See this discussion for more information.

In the Docker Compose documentation, the default name for this configuration file is docker-compose.yml. As mentioned above, this YAML file contains, at a minimum, key-value pairs for the Compose file format version and for the services (containers) in the application stack. This naming convention allows a user to launch the application with the very simple command docker-compose up, without explicitly referencing the configuration file.

However, it is often useful to give the configuration file a more specific name, such as local.yml or production.yml, because different contexts may call for different configurations. For example, local.yml may configure containers for development, with extensive debugging tools and testing suites loaded on launch. In contrast, the production configuration in production.yml won't set up any testing software, may point deployment services to different servers, and may use many different environment variables. With Docker Compose, switching between the development and production versions of an application is as easy as specifying a different configuration file in the docker-compose command: the only difference is the added -f option followed by the YAML file's name, e.g., docker-compose -f local.yml up.
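To make the contrast concrete, here is what a production counterpart of a development service might look like. This production.yml fragment is hypothetical: the production Dockerfile path is an assumption, and the Concept to Clinic repository's actual production setup, if it exists, may differ.

```yaml
# production.yml (hypothetical): same service name as in local.yml,
# but built from a production Dockerfile with debugging turned off.
version: '2'

services:
  prediction:
    build:
      context: .
      dockerfile: ./compose/prediction/Dockerfile   # hypothetical production Dockerfile
    environment:
      - FLASK_DEBUG=0      # no debugger or auto-reloader in production
    # No source-code volume here: the code is baked into the image at build time.
```

It would be launched with docker-compose -f production.yml up, while developers keep using docker-compose -f local.yml up.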

The Docker Compose configuration file for the Concept to Clinic project is called local.yml. (This means some extra typing each time a command is run, but we feel it's good practice to be explicit about which environment you want to run commands for, especially since the project may gain a production settings file if a demo app is deployed to the cloud.)

To build the application stack after cloning the project repository and installing Docker Compose, we simply run docker-compose -f local.yml build from the root directory of the project. We can then boot the application with docker-compose -f local.yml up. The first command builds images for the services in the configuration file that are built from local Dockerfiles. The second command starts each service's container, attaches to them, and aggregates their output in your terminal, so the stack runs as a unified application. A schematic of interacting Docker containers within the Concept to Clinic application is shown below.

With the above high-level explanation of the Docker composition process in mind, let's consider some specific aspects of the service configuration file and Dockerfiles in the Concept to Clinic software architecture.

Compose Services in the Concept to Clinic Challenge

In this post, we will focus on the development configuration file, local.yml. There are four service containers in the Concept to Clinic application, each listed as a configuration key in local.yml (which can be viewed here): postgres for the database service, prediction for the machine learning service, interface for the backend API, and vue for the front-end web development server.

Using Docker Compose, the outputs of these container services are aggregated and able to interact with each other, as shown in the diagram above. The model container interacts with the web frontend via the backend container to provide the user various machine learning services such as nodule identifications, sizes, and relative distances. The user can interact with the case data container via the backend service to update image data with annotations. The Docker Compose configuration file specifies all of these inter-container dependencies while providing flexibility within each container through key-value pair options. Below, we'll look at some specific options and values for the service container configuration keys one by one.

Postgres

The postgres service container has three keys of its own: image, volumes, and environment. The image key tells Docker to create the container from version 9.6 of the postgres image. As usual, if the image isn't cached locally, it will be pulled from Docker Hub, Docker's cloud repository service. Thanks to Docker Hub, we can pull a Postgres server right "off the shelf," with no need to build the image ourselves.

The environment key sets environment variables for the service, in this case the Postgres username, which is the same username provided to the interface service. And there's no need to remember all the steps for setting up new users: just specify POSTGRES_USER inside the environment key!

What about the data? It would be really annoying to have to re-fill the local database every time we restart the Compose configuration. Luckily, Compose lets us declare named Docker volumes, which persist even when no container is running. That's what this code at the top of local.yml does:

volumes:
  postgres_data_dev: {}

Then, in the volumes configuration key under the postgres service, we map this postgres_data_dev volume to the directory inside the container where Postgres expects its data to be stored. Even if we shut down all of the services, this volume will still be available next time:

$ docker volume ls
DRIVER              VOLUME NAME
local               concepttoclinic_postgres_data_dev
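Putting the three keys together, a postgres service with a persistent named volume might look like the following sketch. The user name is a placeholder rather than the project's actual value; /var/lib/postgresql/data is the default data directory of the official postgres image.

```yaml
# Sketch of a postgres service backed by a named volume (placeholder user name).
version: '2'

volumes:
  postgres_data_dev: {}              # named volume; survives container removal

services:
  postgres:
    image: postgres:9.6              # pulled from Docker Hub if not cached locally
    volumes:
      # Mount the named volume over the image's default data directory.
      - postgres_data_dev:/var/lib/postgresql/data
    environment:
      - POSTGRES_USER=example_user   # placeholder; reuse it in the interface service
```

The concepttoclinic_ prefix in the volume listing above is consistent with Compose's default behavior of prefixing volume names with the project name, which defaults to the directory containing the compose file. Running docker-compose down -v, by contrast, removes named volumes and therefore the stored data.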

Prediction

The prediction service container similarly has volumes and environment keys. The Concept to Clinic prediction service is implemented as a lightweight Flask application, so the app's code (along with test images for prediction) is mounted into the container as a volume. Since we are looking at the development configuration file, we set environment variables like FLASK_DEBUG to true.

For the prediction service, instead of an image key we have a build key with a dockerfile option that points to a Dockerfile in the compose directory. A Dockerfile provides Docker with a list of instructions for building a Docker image. These instructions could be issued from the command line one by one, but the Dockerfile automates the process. All relevant Dockerfiles in the Concept to Clinic codebase live in the compose directory. For the prediction service, the development Dockerfile, Dockerfile-dev, in the ./compose/prediction/ subdirectory installs the requirements needed to run the Flask application.

Additionally, there is a ports key in the prediction service configuration that maps host ports to container ports: for a port mapping x:y, the application listening on container port y can be accessed through port x on the host.

Finally, the command key will execute flask run --host=0.0.0.0 --port=8001 when the container starts, launching the Flask app on port 8001 and listening on all network interfaces so that it can be reached from outside the container.
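Here is a sketch of a development prediction service that combines the build, volumes, environment, ports, and command keys described above. The Dockerfile path and the command come from the text; the host and container paths and the FLASK_APP value are hypothetical, not copied from local.yml.

```yaml
# Sketch of a development prediction service (paths and FLASK_APP are assumptions).
version: '2'

services:
  prediction:
    build:
      context: .                                       # build context: project root
      dockerfile: ./compose/prediction/Dockerfile-dev  # dev Dockerfile named in the text
    volumes:
      - ./prediction:/app                  # hypothetical: live source code from the host
    environment:
      - FLASK_APP=src/factory.py           # hypothetical entry point needed by `flask run`
      - FLASK_DEBUG=true                   # enable the debugger and auto-reloader
    ports:
      - "8001:8001"                        # HOST:CONTAINER
    command: flask run --host=0.0.0.0 --port=8001
```

With "8001:8001", a browser or HTTP client on the host can reach the service at localhost:8001. Binding to 0.0.0.0 rather than Flask's default 127.0.0.1 matters here, because a server listening only on the container's loopback interface would not be reachable through the port mapping.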

Interface

The interface service includes every type of key discussed so far, as well as the depends_on key, which references the other application services defined in the configuration file, postgres and prediction. The depends_on key tells docker-compose to start the listed services before starting the service that declares them. So, in the Concept to Clinic case, docker-compose up interface will start the postgres and prediction services before starting the interface. The interface service is a Django application, which is not as lightweight as Flask but is much more feature-rich. Note that, besides the depends_on key, certain environment variables such as POSTGRES_USER are set to the same values across services as well.
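The following sketch shows how such an interface entry could sit under services alongside postgres and prediction. The Dockerfile path, the runserver command, the port, the user name, and the PREDICTION_URL variable are all hypothetical illustrations, not the project's actual settings.

```yaml
services:
  # postgres and prediction are assumed to be defined in this same file.
  interface:
    build:
      context: .
      dockerfile: ./compose/interface/Dockerfile-dev  # hypothetical path
    command: python manage.py runserver 0.0.0.0:8000  # Django development server
    depends_on:
      - postgres          # started before interface
      - prediction        # also started before interface
    environment:
      - POSTGRES_USER=example_user                # must match the postgres service
      - PREDICTION_URL=http://prediction:8001     # hypothetical; services resolve each other by name
    ports:
      - "8000:8000"
```

One design caveat: in this form, depends_on only controls start order. It does not wait for Postgres to actually accept connections, so the application itself should tolerate a database that is still starting up.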

Vue

There's a relatively new vue service in the mix, designed to speed up work on frontend Vue.js components, HTML templates, and other JS. This service mounts several host directories containing frequently changing code into the container. That's nice because changes to the files on the host (perhaps open in a text editor or IDE) trigger the webpack hot code reloader inside the container, allowing developers to see their changes live-reload in the browser.

Keeping this completely separate from the interface service, where it could arguably live, lets us avoid tangling up the Django configuration with Node and NPM solely for development shortcuts. This container isn't suitable for production (there we would build the assets statically and serve them with a production server process), but it is a nice quality-of-life helper for developers working on the frontend.
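A development-only frontend service along these lines might be declared as follows. Everything here is an assumption for illustration: the Dockerfile path, the npm script name, the host directories, and the dev-server port are hypothetical rather than taken from local.yml.

```yaml
services:
  vue:
    build:
      context: .
      dockerfile: ./compose/vue/Dockerfile-dev   # hypothetical: installs Node and npm dependencies
    command: npm run dev                         # hypothetical script that starts webpack's dev server
    volumes:
      # Hypothetical host paths: edits on the host appear inside the container
      # immediately, where webpack's file watcher picks them up.
      - ./interface/frontend/src:/app/src
      - ./interface/frontend/static:/app/static
    ports:
      - "8080:8080"                              # hypothetical dev-server port
```

Mounting only the source subdirectories, rather than the whole frontend directory, keeps the node_modules folder installed in the image from being hidden by the bind mount.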

Conclusion

Docker Compose provides a powerful, flexible, and elegant way of developing complex applications that depend on many different services. Even for development that depends on a single service, running docker-compose up and managing settings in a compose configuration file is often easier than working with the standard Docker command line interface. But it's Docker Compose's ability to orchestrate multiple containers quickly and reliably, both those developed locally and those pulled from Docker Hub, that makes it an obvious choice for the Concept to Clinic challenge.