Open AI Caribbean Challenge

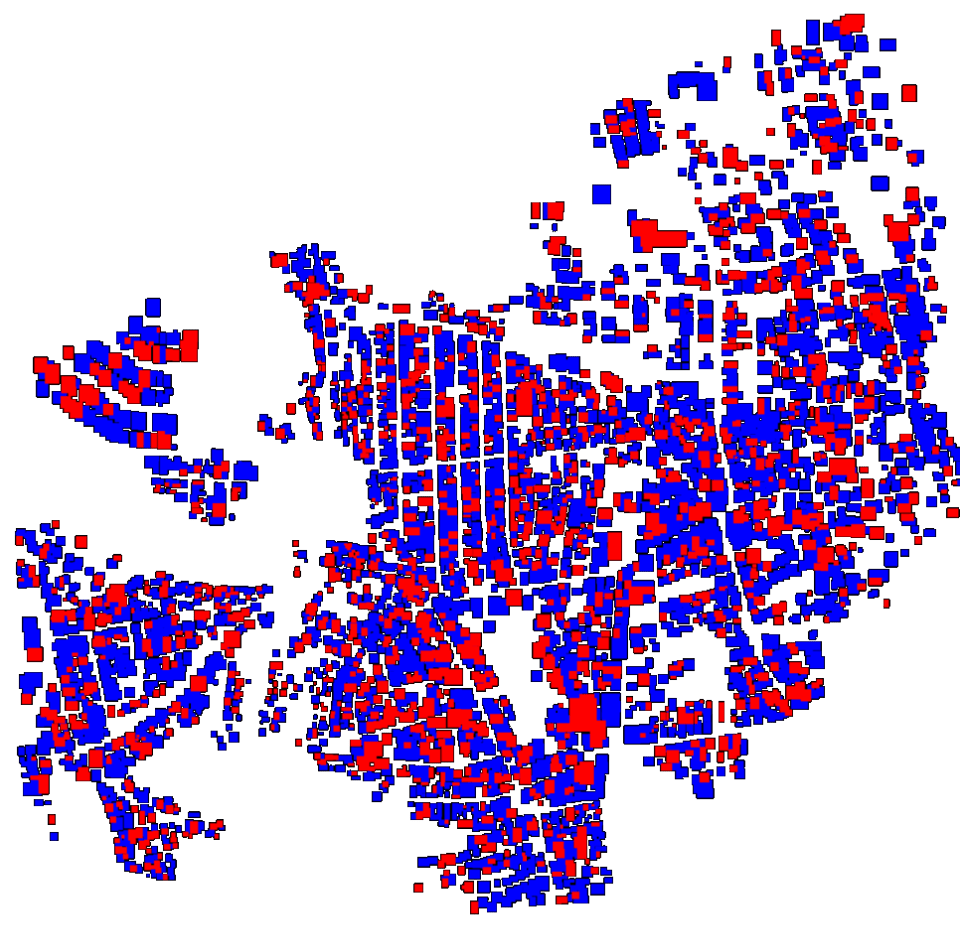

Visualization shared by 2nd place winner @ZFTurbo mapping the rooftop footprints of training (red) and test (blue) buildings in the provided dataset

Natural hazards like earthquakes, hurricanes, and floods can have a devastating impact on the people and communities they affect. Emerging AI tools can help provide huge time and cost savings for critical efforts to identify and protect buildings at greatest risk of damage.

The World Bank Global Program for Resilient Housing and WeRobotics teamed up to prepare aerial drone imagery of buildings across the Caribbean annotated with characteristics that matter to building inspectors. In the Open AI Caribbean Challenge, over 1,400 DrivenDatistas signed up to build models that could most accurately classify roof construction material from aerial imagery in St. Lucia, Guatemala, and Colombia.

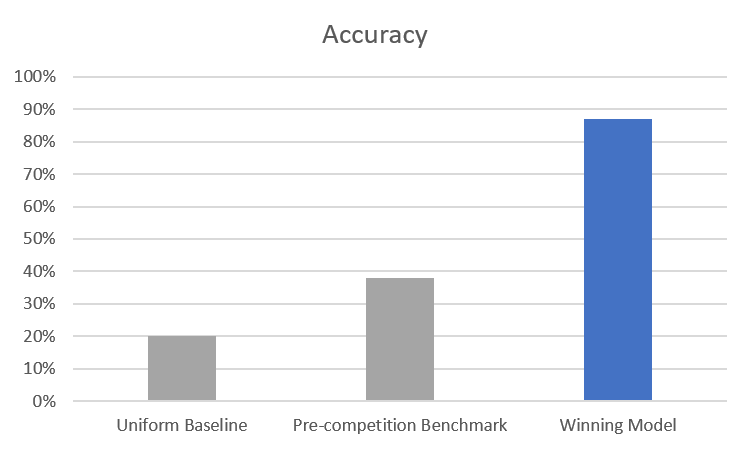

These participants generated more than 2,700 submissions! The models needed to distinguish among five roof classes: healthy metal, irregular metal (patched, rusted, etc.), concrete/cement, other, and incomplete. The algorithm that topped the leaderboard was able to identify the correct roof type 87% of the time, more than twice the pre-competition benchmark!

Congrats to Andrey and Daniel for creating the winning model! This and all the other prize-winning solutions are linked below and made available under an open source license for anyone to use and learn from. **Meet the winners below and get an overview of how they built the best rooftop classifiers!**

Meet the winners

Andrey Poniker and Daniel Fernandez (The team)

Place: 1st

Prize: $5,000

Hometown: Moscow, Russia and Bristol, UK

Username: PermanentPon and DanielFG

Background:

Andrey works as an ML Researcher, building new ML features for a recipe-sharing platform using recipe texts and image data. Before that, he worked as a Data Scientist. He graduated from the Moscow Institute of Physics and Technology with a master's degree in applied physics and mathematics.

Daniel is a Machine Learning Researcher specialised in Computer Vision and Deep Learning. He very much enjoys facing new challenges, so he spends part of his spare time participating in Machine Learning and Data Science competitions.

Summary of approach:

Our approach is based on a two-layer pipeline: the first layer is a CNN image classifier, and the second is a GBM model that combines the first-layer predictions with extra features.

The first-layer CNNs were trained with a stratified 8-fold split. We average the predictions of four slightly different models to improve accuracy.
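A stratified 8-fold split assigns every training building to one of eight folds so that each fold keeps roughly the same mix of roof classes. The sketch below is a minimal pure-Python illustration, not the team's code; the function names and the seed are hypothetical. Producing out-of-fold predictions from the per-fold models (train on seven folds, predict the eighth) is the usual way to feed a second layer, but that detail is our assumption rather than something stated in the summary.

```python
import random
from collections import defaultdict


def stratified_fold_ids(labels, n_folds=8, seed=42):
    """Return a fold id (0..n_folds-1) per sample, keeping class proportions per fold."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    fold_ids = [0] * len(labels)
    offset = 0
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        # Deal this class round-robin across folds, continuing from where the
        # previous class stopped so overall fold sizes also stay balanced.
        for i, idx in enumerate(members):
            fold_ids[idx] = (offset + i) % n_folds
        offset += len(members)
    return fold_ids


def fold_splits(fold_ids, n_folds=8):
    """Yield (train_indices, valid_indices) for each fold in turn."""
    for k in range(n_folds):
        valid = [i for i, f in enumerate(fold_ids) if f == k]
        train = [i for i, f in enumerate(fold_ids) if f != k]
        yield train, valid
```

Each CNN variant would be trained once per `(train, valid)` pair, so with eight folds and four model variants there are 32 training runs in total.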

The second layer adds features derived from location, polygon characteristics, and neighboring buildings. Two models, CatBoost and LightGBM, are blended to improve final accuracy.

Both models use exactly the same features; the only difference between them is the framework used to train them.
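The second layer's input can be pictured as one row per building: the first-layer class probabilities followed by hand-crafted features. The sketch below assumes that "polygon characteristics" means simple shape descriptors (area, perimeter, centroid, vertex count, compactness) computed with the shoelace formula on planar coordinates, and it uses the centroid as a stand-in for the location features. The team's exact feature list isn't given here, and the helper names are hypothetical.

```python
import math


def polygon_features(coords):
    """Shape descriptors for a roof polygon given as a list of (x, y) vertices.

    Assumes projected (planar) coordinates and a simple, non-degenerate polygon.
    A closing vertex equal to the first one (as in GeoJSON rings) is ignored.
    """
    if coords[0] == coords[-1]:
        coords = coords[:-1]
    n = len(coords)
    twice_area = 0.0  # signed, via the shoelace formula
    cx_acc = cy_acc = 0.0
    perimeter = 0.0
    for i in range(n):
        x0, y0 = coords[i]
        x1, y1 = coords[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx_acc += (x0 + x1) * cross
        cy_acc += (y0 + y1) * cross
        perimeter += math.hypot(x1 - x0, y1 - y0)
    area = abs(twice_area) / 2.0
    return {
        "area": area,
        "perimeter": perimeter,
        # Polygon centroid; doubles as a simple "location" feature.
        "centroid_x": cx_acc / (3.0 * twice_area),
        "centroid_y": cy_acc / (3.0 * twice_area),
        "n_vertices": n,
        # 4*pi*A / P^2 equals 1.0 for a circle and is smaller for elongated shapes.
        "compactness": 4.0 * math.pi * area / perimeter ** 2,
    }


def second_layer_row(cnn_probs, coords):
    """Concatenate first-layer class probabilities with polygon features."""
    feats = polygon_features(coords)
    return list(cnn_probs) + [feats[name] for name in sorted(feats)]
```

Because both GBMs receive identical rows, the same feature matrix can be passed to CatBoost and LightGBM unchanged, which matches the team's note that only the training framework differs.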

Check out The team’s full write-up and solution in the competition repo.

Roman Solovyev

Place: 2nd

Prize: $2,000

Hometown: Moscow, Russia

Username: ZFTurbo

Background:

My name is Roman Solovyev. I live in Moscow, Russia. I have a Ph.D. in microelectronics. I currently work as a Leading Researcher at the Institute of Designing Problems in Microelectronics (part of the Russian Academy of Sciences). I often take part in machine learning competitions. I have extensive experience with GBMs, neural nets, and deep learning, as well as with developing CAD programs in several programming languages (C/C++, Python, Tcl/Tk, PHP, etc.).

Summary of approach:

The solution is divided into the following stages:

- Stage 1 [image extraction]: For every house on each map, its RGB image is extracted with 100 additional pixels on each side, along with a mask based on its polygon description in GeoJSON format.

- Stage 2 [metadata extraction and neighbor data]: In this stage we extract 1) meta information for each house in the train and test datasets and 2) several statistics on the roof materials of the houses neighboring a given house. These features are used later in the 2nd level models in addition to the neural net predictions. They are needed because the neural nets only see the roof image and its polygon; they don't know where the house is located on the overall map. The meta features and neighbor statistics help make the predictions more precise.

- Stage 3 [train different convolutional neural nets]: This is the longest and most computationally expensive stage. Seven different neural network models are trained, differing in network architecture, number of folds, set of augmentations, details of the training procedure, input shape, etc.

- Stage 4 [2nd level models]: Predictions from all the neural networks, together with the metadata and neighbor data obtained in the previous steps, are used as input for the second-level models. Since the dimensionality of the task is small, I used three different GBM libraries: LightGBM, XGBoost, and CatBoost. Each of them is run several times with random parameters, and the predictions are averaged. Each model outputs a submission file in the same format as the leaderboard.

- Stage 5 [Final ensemble of 2nd level models]: The predictions from each GBM classifier are then averaged with equal weights to generate the final prediction.
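The neighbor statistics from Stage 2 give the second-level models the spatial context that the CNNs lack. The sketch below is our own minimal illustration, not ZFTurbo's code, and rests on several assumptions: each house is represented by its centroid in a common planar coordinate system, "neighbors" are the k nearest training houses by Euclidean distance, the statistics are class fractions plus mean distance, and the label strings in `ROOF_CLASSES` are assumed names for the five classes.

```python
import math
from collections import Counter

# Assumed label strings for the five roof classes.
ROOF_CLASSES = ("concrete_cement", "healthy_metal", "incomplete", "irregular_metal", "other")


def neighbor_stats(query_xy, train_xy, train_labels, k=5, exclude_index=None):
    """Roof-material fractions and mean distance over the k nearest training houses.

    query_xy: (x, y) centroid of the house being described.
    train_xy / train_labels: centroids and known roof materials of training houses.
    exclude_index: index of the query in train_xy, so a training house never
    counts its own label as a neighbor (that would leak the target).
    """
    dists = []
    for j, (x, y) in enumerate(train_xy):
        if j == exclude_index:
            continue
        dists.append((math.hypot(x - query_xy[0], y - query_xy[1]), j))
    dists.sort()  # brute force: fine for a sketch; a spatial index scales better
    nearest = dists[:k]
    if not nearest:
        raise ValueError("need at least one other labeled house")
    counts = Counter(train_labels[j] for _, j in nearest)
    feats = {f"frac_{c}": counts[c] / len(nearest) for c in ROOF_CLASSES}
    feats["mean_dist"] = sum(d for d, _ in nearest) / len(nearest)
    return feats
```

For a test house, `exclude_index` stays `None`, since the house has no label among the training data to leak.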

Check out ZFTurbo's full write-up and solution in the competition repo.

Stepan Konev and Konstantin Gavrilchik (Roof is on fire)

Place: 3rd

Prize: $1,000

Hometown: Moscow, Russia

Username: sdrnr and gavrilchik

Background:

Stepan: Data Science student at Skoltech, ML Dev at Yandex.

Konstantin: I graduated from MIPT with a bachelor's degree in Applied Math and Physics in 2018. For over 4 years I have worked as a Data Scientist at several companies, where I was involved in almost all types of ML problems: classic ML, CV, and NLP. I also really like competitions, and I was formerly ranked 6th on Kaggle.

Summary of approach:

I trained a few image classification models. I expected a fairly standard approach to perform well, so we trained multiple models and blended their predictions.

As usual, good image augmentations were quite useful (I ran a few experiments with different compositions of augmentations). Adding dropout to DenseNet161 improved its performance on this problem.
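Which augmentations the team composed isn't listed here, so the following is only an illustrative assumption: flips and 90-degree rotations are a natural fit for top-down roof crops, because an aerial image has no canonical "up" and the roof material label is unchanged. The sketch below applies one of the eight symmetries of the square to an image stored as a list of rows; the function names are hypothetical, and dropout in DenseNet161 is not shown.

```python
import random


def rot90(img):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in img]


def random_dihedral(img, rng=random):
    """Apply one of the 8 flips/rotations of the square, chosen at random.

    Aerial roof crops have no canonical orientation, so these transforms
    keep the roof-material label valid.
    """
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    if rng.random() < 0.5:
        img = hflip(img)
    return img
```

In practice the same idea is usually expressed with an image augmentation library and combined with photometric changes (brightness, contrast), which is where experimenting with different compositions comes in.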

Check out Roof is on fire’s full write-up and solution in the competition repo.

Ning Xuan

Place: MATLAB Bonus Award Winner

Prize: $2,000

Hometown: Los Angeles, CA

Username: nxuan

MathWorks, makers of MATLAB and Simulink software, sponsored this challenge and the bonus award for the top MATLAB user. They also supported participants by providing complimentary software licenses and learning resources. Congrats to nxuan as the top contributor using MATLAB!

Background:

I graduated as a biomedical engineer and am currently employed as an image processing software engineer at a medical startup in Los Angeles, California. My job requires me to design computer vision algorithms that automatically analyze and quantify medical X-ray images. Recently, I found that deep learning can perform computer vision tasks with higher accuracy and robustness, so I started investing some of my time in learning it and began my journey into deep learning.

Summary of approach:

First, I framed this as a classification problem: the goal is to classify roof materials as accurately as possible.

For data preparation, I segmented out each roof and saved it as an individual image along with its mask. The mask lets the model focus on the roof whose material it is predicting.
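Cutting out a roof with its mask comes down to two steps: choose a crop window around the polygon, then mark which pixels in that window lie inside it. nxuan worked in MATLAB; the Python sketch below only illustrates the idea under stated assumptions. The polygon is assumed to be already converted from map coordinates to pixel coordinates (that conversion is not shown), the 100-pixel margin is borrowed from the 2nd-place Stage 1 description rather than from nxuan's write-up, and the function names are hypothetical. The mask uses an even-odd (ray casting) point-in-polygon test at each pixel center.

```python
import math


def padded_bbox(polygon, width, height, pad=100):
    """Pixel bounding box of a polygon grown by `pad` pixels and clipped to the image.

    Returns half-open bounds (x0, y0, x1, y1): columns x0..x1-1, rows y0..y1-1.
    """
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    x0 = max(0, math.floor(min(xs)) - pad)
    y0 = max(0, math.floor(min(ys)) - pad)
    x1 = min(width, math.ceil(max(xs)) + pad)
    y1 = min(height, math.ceil(max(ys)) + pad)
    return x0, y0, x1, y1


def point_in_polygon(px, py, polygon):
    """Even-odd rule: count how many polygon edges a ray to +x crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        xa, ya = polygon[i]
        xb, yb = polygon[(i + 1) % n]
        if (ya > py) != (yb > py):  # edge straddles the ray's y level
            x_cross = xa + (py - ya) * (xb - xa) / (yb - ya)
            if px < x_cross:
                inside = not inside
    return inside


def roof_mask(polygon, bbox):
    """Binary mask over the crop: 1 where the pixel center lies inside the roof."""
    x0, y0, x1, y1 = bbox
    return [[1 if point_in_polygon(x + 0.5, y + 0.5, polygon) else 0
             for x in range(x0, x1)]
            for y in range(y0, y1)]
```

The mask can then be saved next to the RGB crop, for example as an extra input channel or to dim pixels outside the roof; how nxuan fed it to the network isn't specified here.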

For training, I used transfer learning with resnet18, resnet50, and resnet101. The final submission is the ensemble average of the three models' predictions.
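An ensemble average of this kind takes each model's per-class probabilities for every building and averages them class by class; the same operation underlies the equal-weight blends described by the other winners. The Python sketch below is illustrative rather than nxuan's MATLAB code. It defaults to equal weights and accepts optional weights, which is an assumption added for flexibility; the function names are hypothetical.

```python
def ensemble_average(model_probs, weights=None):
    """Weighted average of class probabilities from several models.

    model_probs: one entry per model, each a list of per-sample probability rows.
    weights: optional per-model weights; defaults to equal weights.
    """
    if weights is None:
        weights = [1.0] * len(model_probs)
    total = sum(weights)
    n_samples = len(model_probs[0])
    n_classes = len(model_probs[0][0])
    return [
        [sum(w * probs[i][c] for w, probs in zip(weights, model_probs)) / total
         for c in range(n_classes)]
        for i in range(n_samples)
    ]


def predict_labels(avg_probs, class_names):
    """Pick the class with the highest averaged probability for each sample."""
    return [class_names[max(range(len(row)), key=row.__getitem__)] for row in avg_probs]
```

Averaging probabilities rather than hard votes keeps each model's confidence in play, and because every model's rows sum to 1, the weighted average also sums to 1.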

Check out nxuan’s full write-up and solution in the competition repo.

Thanks to all the participants and to our winners! Special thanks to MathWorks for enabling this fascinating challenge and to the World Bank Global Program for Resilient Housing and WeRobotics for providing the data to make it possible!