The software development community has converged on Continuous Integration and Continuous Delivery/Deployment as best practices in most development contexts. Many newer developers, particularly at software-focused startups, have experienced this as a basic way of doing business. It was not always thus.

The Dark Age of software development

In Ye Olden Days, in any significantly large organization, shipping a new version of the organization's software — even a change to one single line of code — was viewed as A Big Deal.

Teams would often set a cutoff date by which all of the pieces of an application were expected to interact correctly, and then the new version of the software would be built and released. Often, in the lead-up to the cutoff date, different developers would be working on different pieces of the application without frequently merging their changes. Sometimes, they would be using some form of version control. Rarely, there would be a suite of automated tests to verify that the software was working properly.

There were people whose main job was to integrate all these pieces, build all of the software, and work out any problems that led to "bad builds."

Sound crazy?

In fairness, "releasing" in 2000 might mean physically pressing the new, compiled release onto a CD and then mailing it out to users eligible to receive updates. Compare that to pushing out a new version of a centrally hosted Software as a Service (SaaS) web application.

Even so, this all worked out about as well as you would expect.

Run ALL the tests!

As testing and distributed version control became more established cultural norms in the development community, there was a concurrent push towards keeping the mainline branch "in the green," or always passing the test suite. As early as 2000, these mores were sufficiently ingrained to be included in The Joel Test, which is a heuristic for outsiders to get a quick feel for how functional a software team is.

Human error is among the most common causes of failure across all industries. Having tests that can run and check the software is great, but relying on humans to remember to do that every time is setting a team up for failure.

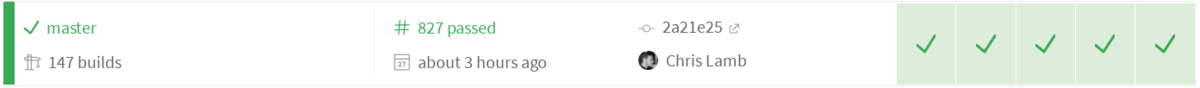

What most teams want is to automate this process using a system that runs all of the tests on all of the branches every time something changes. This way, everybody has a feel for the status of feature branches currently in development, developers do their best to integrate changes into the mainline as quickly as possible, and the master version of the software is always in a ready-to-ship posture.

That kind of system is known as Continuous Integration, or CI for short. It has been something of a game changer for quality assurance and change management.

Deploy early, deploy often

Here's a sketch of the typical change cycle if the team uses a git feature branch workflow:

- A developer decides to make a change or implement a new feature.

- She checks out a branch from the HEAD commit on the repository's master branch.

- She makes her changes. (This is the hard part.)

- If the master branch has changed in the meantime, she rebases onto the current HEAD to reconcile any discrepancies that may have arisen since she started working.

- She then opens a pull request which proposes to merge her changes onto the master branch.

- The CI system runs all of the tests, including any new tests the developer added to verify her contributions work as intended. If all the tests pass, the build is marked as passing. If any test fails, the build fails loudly.

- In many organizations, if the build passes then another developer does a code review on this pull request, and may ask questions or suggest changes.

- If the build is passing and the code review is satisfactory, the pull request is accepted and the branch version is now the master version.
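The cycle above can be sketched with plain git commands. This is a minimal sketch that runs in a throwaway local repository. The branch name `my-feature`, the file names, and the commit messages are all made up for illustration, and the final fast-forward merge only stands in for accepting a pull request on a hosting service, where CI and code review would happen first.

```shell
set -eu

# Work in a throwaway repository so nothing real is touched.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # make sure the first branch is called master
git config user.name "Example Dev"        # hypothetical identity for the demo commits
git config user.email "dev@example.com"

echo "v1" > app.txt
git add app.txt
git commit -q -m "Initial commit"

# The developer branches from the HEAD commit of master and makes her changes.
git checkout -q -b my-feature
echo "new feature" > feature.txt
git add feature.txt
git commit -q -m "Add feature"

# Meanwhile, somebody else's change lands on master.
git checkout -q master
echo "hotfix" > hotfix.txt
git add hotfix.txt
git commit -q -m "Hotfix on master"

# She rebases onto the current HEAD of master to reconcile the two histories.
git checkout -q my-feature
git rebase -q master

# Stand-in for "pull request accepted": master fast-forwards to the branch.
git checkout -q master
git merge -q --ff-only my-feature

git --no-pager log --oneline
```

Because the branch was rebased first, the merge is a simple fast-forward and master ends up with a linear history containing both the hotfix and the new feature.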

Once the team has a master branch that is trusted to be ready-to-ship at all times, the natural next step is to actually go ahead and ship it every time it changes! The same CI system that ran all of the tests can let us deploy that software using whatever automated process is already in place for deployment. (Deployment is automated, right???)

This practice is known as Continuous Deployment (or Continuous Delivery), or "CD" for short. And because most systems do both jobs, they're often referred to as CI/CD or by just one of the two names.
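As a rough sketch of the "ship it every time master changes" rule, a CI job might gate deployment on three conditions: the tests passed, the build is for master, and the build is not for a pull request. The variable names `CI_BRANCH`, `CI_IS_PULL_REQUEST`, and `CI_TEST_EXIT_CODE` and the `deploy` function are hypothetical placeholders, not the names any particular CI system uses.

```shell
# Succeed (exit code 0) only when a finished build should be deployed.
# Arguments: branch name, "true"/"false" for pull-request builds, test exit code.
should_deploy() {
  branch="$1"
  is_pull_request="$2"
  tests_exit_code="$3"
  [ "$tests_exit_code" -eq 0 ] && [ "$branch" = "master" ] && [ "$is_pull_request" = "false" ]
}

# Hypothetical placeholder for whatever automated deployment process already exists.
deploy() {
  echo "deploying $1"
}

# CI_BRANCH, CI_IS_PULL_REQUEST and CI_TEST_EXIT_CODE are assumed to be set by
# the CI system; the defaults below make the script skip deployment when they are missing.
if should_deploy "${CI_BRANCH:-}" "${CI_IS_PULL_REQUEST:-false}" "${CI_TEST_EXIT_CODE:-1}"; then
  deploy "${CI_BRANCH}"
else
  echo "skipping deployment"
fi
```

Defaulting the test exit code to a failure is a deliberate choice here: if the CI system does not report a result, the safe behavior is to not ship.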

A brief aside: data science is software, people!

At DrivenData, we have been writing and speaking on the topic of reproducible data science for quite a while. We strongly believe that data folks should be adopting the hard-learned lessons of the software industry — and that we ignore them at our peril.

If you are interested in this, check out our Cookiecutter Data Science project which deals with project organization, and check out our PyData 2016 talk called Data science is software (embedded above).

How we used Travis CI with Docker Compose in the Concept to Clinic project

Every time a contributor opened or modified a pull request, we wanted the tests to run as described above. Additionally, in the interest of consistency, we wanted contributors to adopt a PEP8-friendly code style, so we also made passing flake8 and pycodestyle checks a mandatory part of a successful build.

That's a pretty normal use case, but we had a couple of interesting challenges to consider for the CI/CD pipeline.

For one, our project is set up as several services running in Docker containers which are configured and linked together by Docker Compose. Any tool we chose had to be flexible enough to support Docker Compose.

Also, like many data-intensive projects, we had a large amount of data that was impractical to track using git, so we were using GitHub's Large File Storage (LFS) service, which provides a familiar pull/push workflow for the project's data. On top of moving data back and forth, some of the tests were computationally intensive, so in addition to reasonable network speed and bandwidth limits, the tool needed to provide a bit of compute power without timing out or exceeding usage limits.

For our purposes, we chose Travis CI. Travis is a user-friendly and fully featured CI/CD platform that lets you define the entire CI/CD process in a single configuration file. Additionally, they provide their service for free to open source projects, which we think is a great way to show appreciation for the OSS community!

Travis let us set this up quite easily by installing Docker and Docker Compose in the before_install section of our .travis.yml and specifying settings for Git LFS to avoid pulling all of the data every time.

Here's the script section of the configuration file, which shows all the steps that must return a successful exit code in order for the build to pass:

    script:
      - flake8 interface
      - pycodestyle interface
      - flake8 prediction
      - pycodestyle prediction
      - sh tests/test_docker.sh

The last line runs the bash script that tells Docker to run the tests, which has lines like this:

    docker-compose -f local.yml run prediction coverage run --branch --omit=/**/dist-packages/*,src/tests/*,/usr/local/bin/pytest /usr/local/bin/pytest -rsx

This line runs the tests for the prediction service and also generates a code coverage report to show which lines of code the tests hit.
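The rule that every step must return a successful exit code can be sketched in a few lines of shell. This is only an illustration of the idea, not how Travis itself is implemented: the `run_steps` function is hypothetical, and `true` and `false` stand in for real steps such as the linters and the test script.

```shell
# Run every step, remember whether any of them failed, and report the
# overall result through the function's own exit code.
run_steps() {
  failed=0
  for step in "$@"; do
    echo "running: $step"
    if ! sh -c "$step"; then
      echo "FAILED: $step"
      failed=1
    fi
  done
  return "$failed"
}

# 'true' and 'false' are placeholders for commands like "flake8 interface".
if run_steps "true" "false" "true"; then
  echo "build passed"
else
  echo "build failed"
fi
```

Running every step even after one fails means a single build reports all of the problems at once, rather than stopping at the first failing check.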

After every successful build, we also wanted to trigger a FOSSA license check and then notify the project's Gitter and our internal Slack about build status. Travis made it easy for us to add those webhooks and also put the Slack notification webhook (as well as other secret environment variables) in as encrypted values so that the .travis.yml could remain public without disclosing anything sensitive.
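We relied on Travis's own notification settings for this, but the underlying idea of keeping a webhook URL out of the public repository can be sketched by hand. The sketch below assumes the secret is exposed to the build as an environment variable, here given the hypothetical name `SLACK_WEBHOOK_URL`, and posts a message to a Slack incoming webhook with curl. The function names and the message text are made up for illustration.

```shell
# Build a minimal JSON payload for a Slack incoming webhook.
# Note: this naive version does not escape quotes or backslashes in the message.
build_payload() {
  printf '{"text": "%s"}' "$1"
}

# Post a message to Slack, reading the webhook URL from the environment so
# that the secret never appears in the repository.
notify_slack() {
  message="$1"
  if [ -z "${SLACK_WEBHOOK_URL:-}" ]; then
    echo "SLACK_WEBHOOK_URL is not set; skipping notification" >&2
    return 0
  fi
  curl --silent --show-error --fail \
    -X POST \
    -H 'Content-Type: application/json' \
    --data "$(build_payload "$message")" \
    "$SLACK_WEBHOOK_URL"
}

notify_slack "Build passed on master"
```

When the variable is missing, for example on a contributor's fork that has no access to the secret, the function skips the notification instead of failing the build.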

Continuous x ∀ x ∈ {integration,deployment}: an all-around good idea

We think CI/CD is a good development practice, and that Travis CI is a great tool for the job. We're grateful to the Travis team for supporting this competition, and for their extremely quick and helpful support whenever we had questions.

Check them out!